5 Failures in Test Automation – and Best Practices for Tackling Them

Too many test automation projects fail – and often for predictable reasons. To help you avoid these pitfalls, we have condensed the most common issues into this short guide along with related best practices for achieving success with test automation.

1. Going for 100% test coverage with automation

Remember: Test automation does not mean completely automated testing. One of the primary benefits of test automation is that it frees up time for testing that must be done manually, e.g. testing unstable features or exploratory testing. In other words, it’s about applying testers’ skills where they provide the most value. Automated functional UI testing does not equal 100% test coverage; however, it’s our experience that by using automation effectively, teams can come very close to achieving their desired degree of test coverage.

Best practice: Plan to automate your test suite gradually. We recommend starting with the flows that are easiest to automate. In most cases, you will find that relatively simple, highly repetitive flows take up by far the most testing time.

2. Testing for multiple things in one test case

A test case built to verify more than one aspect can fail in more than one way. If it fails, someone must inspect it manually to figure out which aspect failed.

Best practice: Build each test case so that it logically tests only one aspect. This way, there is no doubt about what went wrong when a test case fails. Instead of bundling multiple tests into one test case, build reusable components with your test automation tool. Logic shared between test cases is then easy to reuse, and the time required to create a new test case is minimized.
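To make this concrete, here is a minimal sketch using Python's built-in `unittest` module. The `Cart` class and the `cart_with` helper are hypothetical: `Cart` stands in for the application under test, and `cart_with` plays the role of a reusable component. A real test automation tool would express these differently. Each test case verifies one aspect, and the shared setup lives in one helper instead of being bundled into a single large test.

```python
import unittest


# Hypothetical system under test: a minimal shopping cart (illustration only).
class Cart:
    def __init__(self):
        self.lines = {}  # name -> (unit_price, quantity)

    def add(self, name, unit_price, quantity=1):
        if quantity <= 0:
            raise ValueError("quantity must be positive")
        _, existing = self.lines.get(name, (unit_price, 0))
        self.lines[name] = (unit_price, existing + quantity)

    def total(self):
        return sum(price * qty for price, qty in self.lines.values())

    def item_count(self):
        return sum(qty for _, qty in self.lines.values())


# Hypothetical reusable component: the one place that knows how to build a
# cart for a test. Test cases call it instead of repeating the setup steps.
def cart_with(*lines):
    cart = Cart()
    for name, unit_price, quantity in lines:
        cart.add(name, unit_price, quantity)
    return cart


class CartTests(unittest.TestCase):
    # Each test checks exactly one aspect, so a failure names its own cause.
    def test_total_sums_line_prices(self):
        cart = cart_with(("apple", 2, 3), ("pear", 5, 1))
        self.assertEqual(cart.total(), 11)

    def test_item_count_sums_quantities(self):
        cart = cart_with(("apple", 2, 3), ("pear", 5, 1))
        self.assertEqual(cart.item_count(), 4)

    def test_rejects_non_positive_quantity(self):
        with self.assertRaises(ValueError):
            cart_with(("apple", 2, 0))
```

If one of these tests fails, its name alone tells you which behavior broke, and the other two still report their results independently.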

3. Attempting to execute hundreds of test cases in an exact and predefined order

As mentioned above, each test case should test just one thing. The “atomic” ideal of testing dictates that each test case can run on its own without depending on other cases, and that the order in which cases run should not matter. The reason is that if your suite of hundreds of tests must run in a certain order and one test case fails, you must run the entire suite again when re-testing. Identifying the error would, again, require manual inspection. This is obviously very inefficient.

Furthermore, if test #53 in an interdependent sequence of 100 tests fails, how can you trust the results of tests #54-100?

When it comes to test automation, we sometimes see test teams building and scheduling automated test cases with the mindset that they make up a sequence to be executed in a certain order. This approach works against the benefits that come with test automation: flexibility, agility, etc.

Best practice: Build automated test cases that are independent and self-contained. This way, they can all be scheduled at once and executed at any time and in parallel, e.g. across different environments.
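The sketch below, again in Python, illustrates what independent, self-contained tests look like. `UserStore` is a hypothetical in-memory stand-in for the application under test, and `run_shuffled_in_parallel` is a hypothetical hand-written runner. Each test creates the state it needs instead of relying on an earlier test, so the runner can shuffle the order and execute the tests on a thread pool and still get the same results.

```python
import random
from concurrent.futures import ThreadPoolExecutor


# Hypothetical in-memory stand-in for the application under test.
class UserStore:
    def __init__(self):
        self.users = {}

    def register(self, username):
        if username in self.users:
            raise ValueError("username taken")
        self.users[username] = {"active": True}

    def deactivate(self, username):
        self.users[username]["active"] = False


# Each test builds its own fresh state; none relies on another test having run.
def test_register_creates_active_user():
    store = UserStore()
    store.register("alice")
    assert store.users["alice"]["active"]


def test_duplicate_registration_is_rejected():
    store = UserStore()
    store.register("alice")
    try:
        store.register("alice")
    except ValueError:
        return
    raise AssertionError("expected ValueError")


def test_deactivate_marks_user_inactive():
    store = UserStore()
    store.register("bob")  # own setup, not borrowed from an earlier test
    store.deactivate("bob")
    assert not store.users["bob"]["active"]


TESTS = [
    test_register_creates_active_user,
    test_duplicate_registration_is_rejected,
    test_deactivate_marks_user_inactive,
]


def run(test):
    """Run one test and return (name, passed) without stopping the others."""
    try:
        test()
        return test.__name__, True
    except Exception:
        return test.__name__, False


def run_shuffled_in_parallel(tests, seed=None, workers=3):
    """Shuffle the test order, then run the tests concurrently on a thread pool."""
    order = list(tests)
    random.Random(seed).shuffle(order)  # the order must not affect results
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return dict(pool.map(run, order))
```

In a real project, the test framework or a plugin would normally handle ordering and parallelism (for example, pytest-xdist for running pytest tests in parallel). The hand-written runner is here only to show that independent tests tolerate any execution order.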

4. Not ensuring collective ownership of test automation

Introducing an automation tool comes with the risk that a long learning curve or technical challenges take up too much time and require a lot of support between team members. This takes away time and focus from testers’ primary tasks (building test cases, analyzing requirements, reporting, etc.).

Most automation tools require users to write code, which widens the divide between technical testers, who can code, and “non-technical” testers, who can’t. Typically, the technical team members are charged with implementing test automation, and little or no ownership is shared with other team members.

This split in a team can cause several issues:

- If only a few members own automation, then they are the only ones who understand and can maintain the automated test cases, as well as analyze the results from automated tests.

- If the automation “owners” leave the company, they leave behind a suite of test cases that no one can use. Time must then be allocated to rebuilding these test cases.

- Letting only “technical” testers work with automated tests risks keeping testers with highly specialized domain knowledge out of the loop. These people have expert knowledge about the application under test and can provide valuable insights when preparing test cases for automation.

Best practice: Remember that success with automation depends on a team’s collective knowledge. Adopt a test automation platform that all testers can work with, so that automation becomes a natural part of the daily work of all team members.

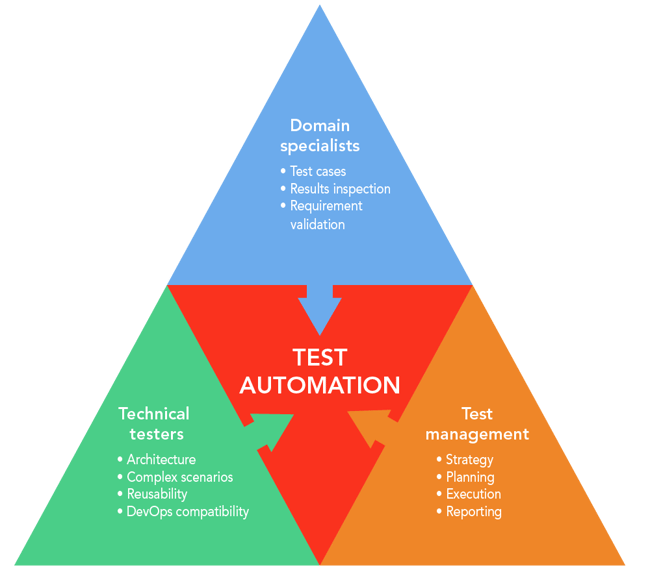

All members of a test team, including domain specialists, technical testers, and test management, play an important role in making test automation work.

5. Using a tool that is not a good technical fit

When looking for a test automation platform, make sure it fits your technical requirements. To verify this, install the tool in your own environment and let potential users trial it with your own software.

A common mistake is to choose a tool based only on your current challenges and automation objectives. This comes with the risk of being stuck with a tool that is not a good technical fit for your organization in the long run.

Best practice: Implementing test automation is a long-term strategic choice and should be handled as such. When evaluating automation tools, look across your organization and identify all the applications and technologies that could be potential targets for automation. Identify the scenarios where test cases need to move between technologies, e.g. both web and desktop applications, and select an automation platform that has matching capabilities.
