An Introduction to Data-Driven Testing

There are many methods for implementing software testing. However, not all require the same effort for test creation and maintenance. If you need to run the same tests, but with different parameter values, then you can do this through data-driven testing.

What is data-driven testing (DDT)?

Data-driven testing (sometimes abbreviated to DDT) is a software testing methodology where test data is stored in external data sources like spreadsheets, databases, or CSV files, rather than hard-coded into the test case. This allows the same test logic to be executed multiple times with different sets of data.

When using a data-driven testing methodology, we create test scripts or test flows to be executed together with their related data sets in a test automation framework or tool.

The goal is to separate test logic from test data. This creates reusable tests that testers can run with multiple sets of data, which improves test coverage and reduces maintenance.

Data-driven testing is typically used in conjunction with test automation, where a tool or framework reads data from external sources and executes the test cases automatically.
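To make the separation of test logic and test data concrete, here is a minimal sketch in Python. The `check_login` function and the login data are hypothetical stand-ins, and the CSV is embedded as a string only so the example runs on its own; in practice it would be an external file.

```python
import csv
import io

# Hypothetical test data. In a real project this would be an external
# CSV file, spreadsheet, or database table rather than an inline string.
LOGIN_DATA = """username,password,expected
alice,correct-horse,True
alice,wrong,False
,correct-horse,False
"""


def check_login(username, password):
    """Stand-in for the system under test (an assumption for this sketch)."""
    return username == "alice" and password == "correct-horse"


def load_rows(source):
    """Parse CSV text into one dict per data row, keyed by column name."""
    return list(csv.DictReader(io.StringIO(source)))


def run_data_driven(rows):
    """Run the same test logic once per row; return (row, passed) pairs."""
    results = []
    for row in rows:
        expected = row["expected"] == "True"
        actual = check_login(row["username"], row["password"])
        results.append((row, actual == expected))
    return results


results = run_data_driven(load_rows(LOGIN_DATA))
for row, passed in results:
    status = "PASS" if passed else "FAIL"
    print(status, row["username"] or "(empty)", row["password"])
```

Adding a new scenario here means adding a row to the data, not touching `run_data_driven`.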

What are the benefits of data-driven testing?

Through data-driven testing, you can execute the same test with different data combinations. By doing this you can generate tests at scale that are easier to maintain. This comes with a number of benefits:

- Reusability: By isolating data from tests, the same test can be reused for different scenarios, reducing the time and effort spent on maintenance.

- Maintainability: Test data can be updated without modifying the tests, making maintenance simpler and less error-prone.

- Scalability: Data-driven testing allows for the testing of multiple scenarios in one test, making it easier to scale tests.

- Enhanced test coverage: With automated data-driven testing, it becomes possible to test more scenarios in less time, allowing teams to cover more tests with each release.

- Consistency and accuracy: When handling large volumes of data and repetitive tasks, even the most meticulous manual tester can make typing errors. Automated data-driven testing avoids these input mistakes by reading the exact values from the data source.

Common challenges of data-driven testing

Data-driven testing, while powerful, can present some challenges.

When using code-based automation tools, a primary obstacle is the necessity for coding expertise.

Creating and maintaining test scripts for diverse data sets can be complex and prone to errors. This can limit both the team's efficiency in building and maintaining tests and the quality of those tests, since their effectiveness relies heavily on the automation team's skill level.

On the other hand, no-code tools simplify the process by providing a more intuitive interface that allows testers to get a comprehensive overview of the test cases and make adjustments more easily.

Tools aside, data-driven tests are only as good as the quality of the data used; poor data quality can lead to inaccurate test results and undermine the entire testing process. The test quality hinges on the team's ability to configure the tests correctly and the integrity of the data.

Data validation, especially with large volumes of data, is another significant challenge, as it is a time-consuming process that requires meticulous attention to detail to ensure accuracy.
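Part of that validation effort can itself be automated. The sketch below checks a data set before any test runs and reports every problem with its row number. The column names (`amount`, `annual_rate`, `months`) and the sample rows are assumptions made up for illustration.

```python
import csv
import io

# Hypothetical columns that every row must provide as numbers.
REQUIRED_NUMERIC = ("amount", "annual_rate", "months")

# Hypothetical data with two deliberate problems (rows 3 and 4 of the file).
SAMPLE = """amount,annual_rate,months
10000,0.05,12
,0.05,24
5000,five,36
"""


def validate_rows(rows):
    """Return a list of (row_number, message) for every problem found."""
    problems = []
    # Start at 2 because row 1 of the file is the header.
    for number, row in enumerate(rows, start=2):
        for field in REQUIRED_NUMERIC:
            value = (row.get(field) or "").strip()
            if not value:
                problems.append((number, f"missing {field}"))
                continue
            try:
                float(value)
            except ValueError:
                problems.append((number, f"{field} is not numeric: {value!r}"))
    return problems


problems = validate_rows(csv.DictReader(io.StringIO(SAMPLE)))
for number, message in problems:
    print(f"row {number}: {message}")
```

Running a check like this before the test run helps distinguish "the application is broken" from "the test data is broken".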

Real-world examples of data-driven testing

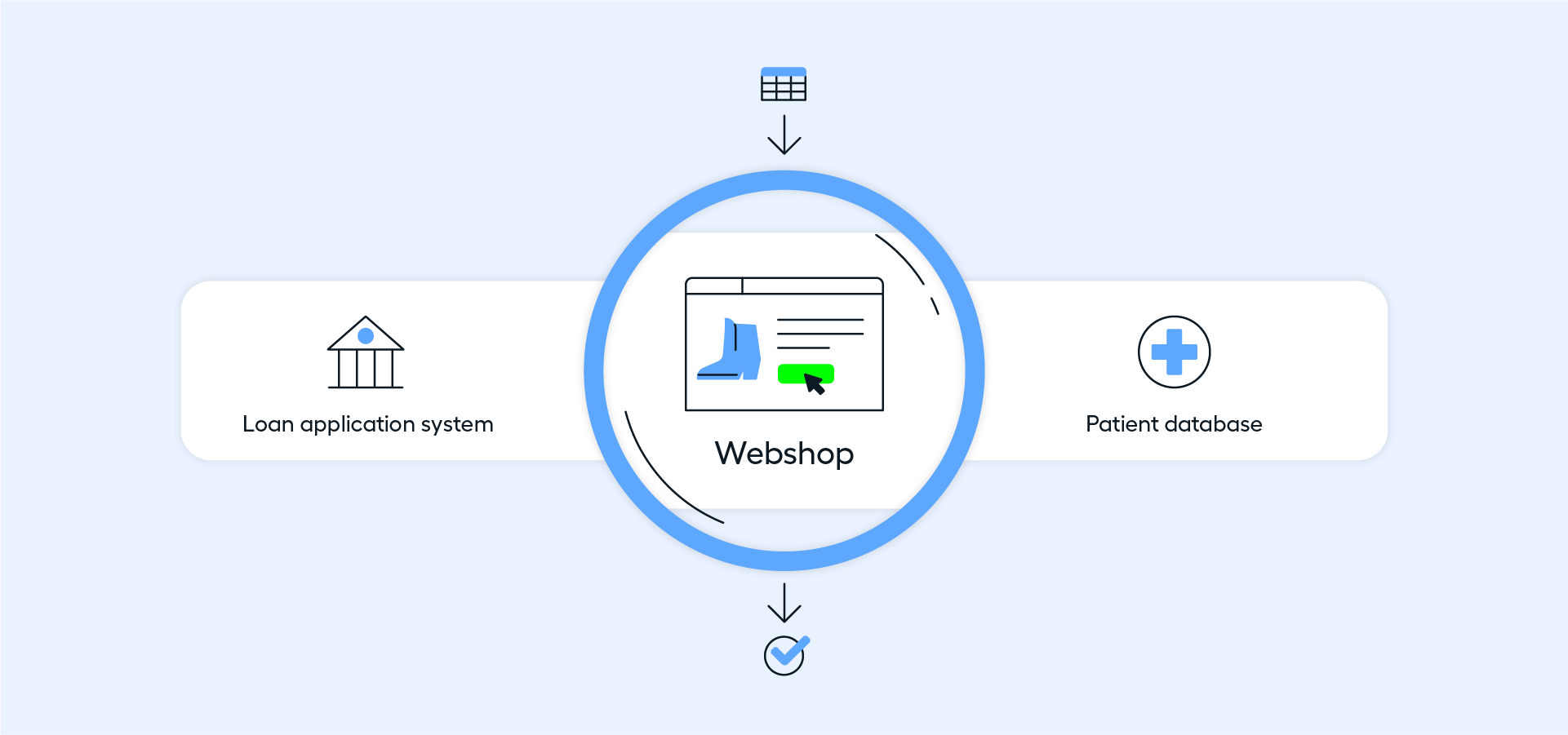

Consider a scenario where you need to automate testing for a web application with various input fields, such as an online registration form or a loan application system. For simple, isolated tests, you might manually code the input values directly into your test scripts. While this works for small-scale testing, it becomes impractical for testing at scale.

Example 1: Loan application system

For instance, in a loan application system, you need to test different combinations of loan amounts, interest rates, and repayment periods. Hard-coding these values quickly becomes overwhelming and error-prone, especially when you have to cover a wide range of best-case, worst-case, and edge scenarios.

To solve this, you can store all your test data in a spreadsheet or a database. Your automated tests can then dynamically read the input values from this data source. Using data-driven testing in this way allows for more manageable and scalable testing processes.
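As a rough illustration of the loan example, the sketch below runs one check against several rows of loan data. The `monthly_payment` function is a hypothetical system under test that uses the standard fixed-rate annuity formula, and the rows (including the expected payments) are illustrative values, not data from a real system.

```python
import csv
import io

# Hypothetical rows; in practice these would come from a spreadsheet or database.
LOAN_DATA = """case,amount,annual_rate,months,expected_payment
typical,10000,0.06,12,860.66
long_term,200000,0.06,360,1199.10
zero_interest,1200,0.00,12,100.00
single_month,1000,0.12,1,1010.00
"""


def monthly_payment(amount, annual_rate, months):
    """Fixed-rate annuity payment, rounded to cents (assumed system under test)."""
    monthly_rate = annual_rate / 12
    if monthly_rate == 0:
        # Edge case: without interest the formula below would divide by zero.
        return round(amount / months, 2)
    payment = amount * monthly_rate / (1 - (1 + monthly_rate) ** -months)
    return round(payment, 2)


def run_loan_cases(source):
    """Run the same check for every row; return {case name: passed}."""
    outcomes = {}
    for row in csv.DictReader(io.StringIO(source)):
        actual = monthly_payment(
            float(row["amount"]), float(row["annual_rate"]), int(row["months"])
        )
        expected = float(row["expected_payment"])
        outcomes[row["case"]] = abs(actual - expected) < 0.005
    return outcomes


outcomes = run_loan_cases(LOAN_DATA)
print(outcomes)
```

Edge scenarios such as a zero interest rate or a one-month term become extra rows rather than extra test scripts.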

Example 2: Webshop

Another example is testing an online shopping cart, where you can store various combinations of product quantities, discount codes, and payment methods in a spreadsheet. Your automated tests will pull these values from the spreadsheet and run through every listed combination, ensuring thorough testing without the need to manually update the test scripts for each new set of data.
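One way to sketch the shopping cart case in code is with Python's built-in `unittest` module, where `subTest` reports each data row separately. The `cart_total` function, the `SAVE10` discount code, and the rows are all hypothetical, and payment methods are left out to keep the example short.

```python
import csv
import io
import unittest
from decimal import Decimal

# Hypothetical cart scenarios; normally kept in a spreadsheet or CSV file.
CART_DATA = """unit_price,quantity,discount_code,expected_total
10.00,1,,10.00
10.00,3,SAVE10,27.00
19.99,2,SAVE10,35.98
10.00,2,BOGUS,20.00
"""

# Assumed discount table: code -> fraction taken off the subtotal.
DISCOUNTS = {"SAVE10": Decimal("0.10")}


def cart_total(unit_price, quantity, discount_code):
    """Hypothetical system under test: subtotal minus any known discount."""
    subtotal = unit_price * quantity
    rate = DISCOUNTS.get(discount_code, Decimal("0"))
    return (subtotal * (1 - rate)).quantize(Decimal("0.01"))


class CartTotalTest(unittest.TestCase):
    def test_rows(self):
        for row in csv.DictReader(io.StringIO(CART_DATA)):
            # subTest keeps going after a failure and labels it with the row.
            with self.subTest(**row):
                total = cart_total(
                    Decimal(row["unit_price"]),
                    int(row["quantity"]),
                    row["discount_code"],
                )
                self.assertEqual(total, Decimal(row["expected_total"]))


def run_suite():
    """Run the test case programmatically and return the result object."""
    suite = unittest.defaultTestLoader.loadTestsFromTestCase(CartTotalTest)
    return unittest.TextTestRunner(verbosity=0).run(suite)


result = run_suite()
```

`Decimal` is used instead of `float` so that currency comparisons are exact.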

Example 3: Patient database

A final example is testing a healthcare application that processes patient information. Here, you can document different patient profiles, medical histories, and treatment plans in a centralized data source. The automated tests will use this data to simulate various patient scenarios, checking that the application handles each documented case correctly.

By leveraging data-driven testing in these ways, you streamline the testing process, making it more efficient and effective, regardless of the complexity or scale of the application.

Data-driven testing with Leapwork

Choosing Leapwork for data-driven testing offers significant advantages, primarily due to its user-friendly, no-code platform. The drag-and-drop interface simplifies test creation and management, making it accessible to users without technical backgrounds. Leapwork supports various data sources like Excel, CSV, and databases, allowing easy integration of existing data. This flexibility, combined with the ability to reuse test components, enhances efficiency and reduces redundancy.

Leapwork also excels in scalability and comprehensive reporting. It can handle complex data sets and integrates seamlessly with CI/CD pipelines, supporting DevOps practices. Detailed reporting and analytics provide insights into test performance, facilitating quick issue identification and resolution. These features, along with robust error handling and collaboration capabilities, make Leapwork a cost-effective solution that enhances the accuracy and efficiency of your testing efforts.

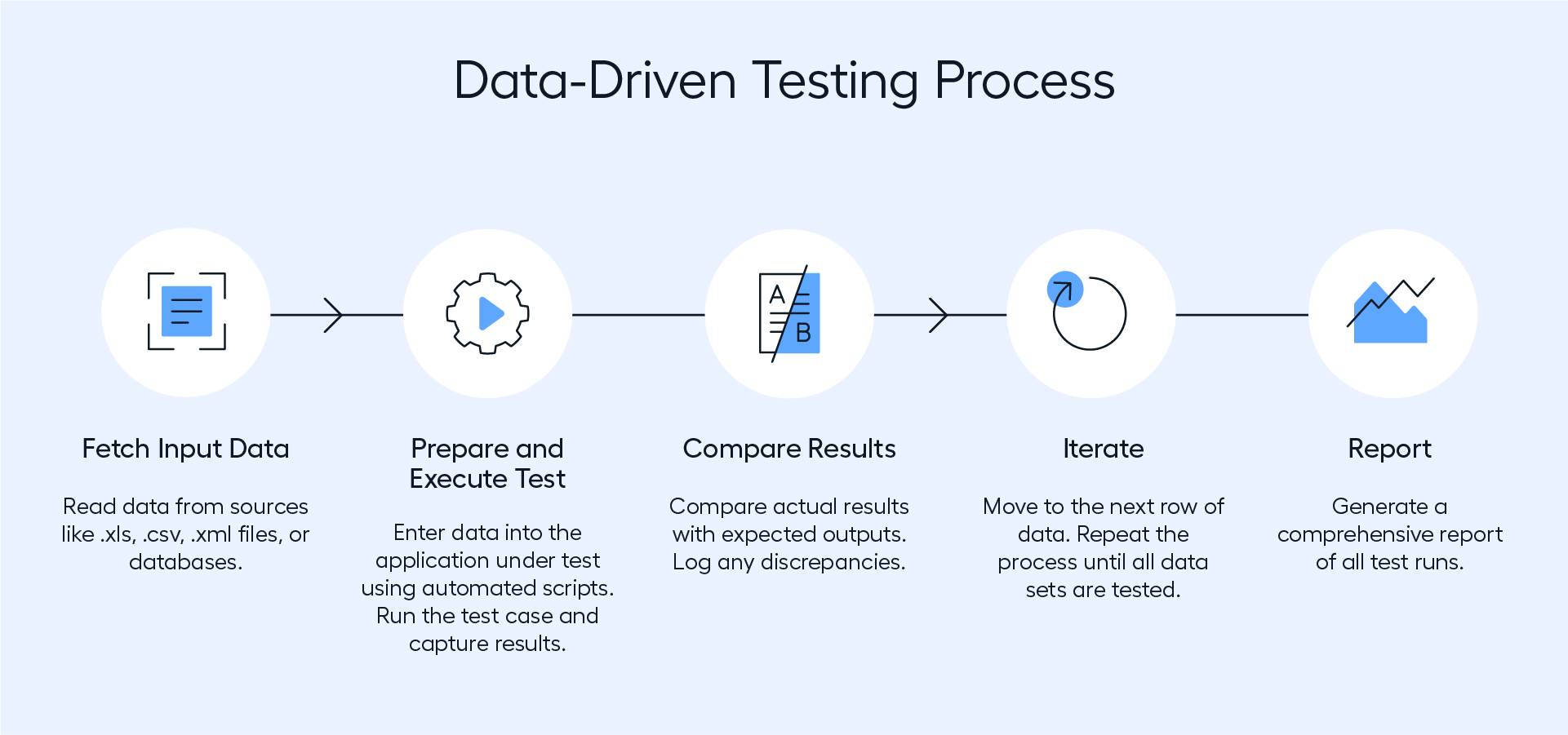

Here’s how data-driven testing works in Leapwork’s test automation platform:

- Create a test case: Start by designing a test case in Leapwork's visual, no-code interface. Drag and drop building blocks to create the test flow. This could involve steps like opening a web page, filling out a form, and verifying results.

- Define data source: Choose your data source. Leapwork supports various data sources such as Excel spreadsheets, CSV files, databases, or any other structured data format. Import the data you want to use for testing. You can also make use of Leapwork’s Generative AI capabilities for test data generation directly in your test cases (read more below!).

- Parameterize test case: Replace hard-coded values in your test case with parameters. These parameters will be filled with data from the data source during test execution.

- Connect data source to test case: Link the data source to your test case. In Leapwork, this is done by mapping the data fields from your source to the parameters in the test case.

- Configure data-driven loop: Set up a data-driven loop in Leapwork. This loop will iterate through each row of data in your source, executing the test case with the corresponding data set. This allows the same test logic to run multiple times with different data inputs.

- Execute tests: Run the test case. Leapwork will automatically execute the test for each data row in the data source, ensuring consistent and repeatable test execution with different data sets.

- Analyze results: Once the tests are executed, review the results in Leapwork's dashboard and video log. The results will show which data sets passed or failed, allowing you to identify any issues related to specific data inputs.

- Report and optimize: Generate reports from the test results to share with stakeholders. Use these insights to optimize your testing process, making adjustments to test cases or data as needed.
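Leapwork itself is a no-code tool, so none of the steps above require writing code. For readers who think in code, though, the same workflow (parameterize, loop over rows, execute, analyze, report) can be sketched generically in Python. Nothing below is Leapwork's API; the `register` function and the rows are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class RowResult:
    row_number: int
    data: dict
    passed: bool
    detail: str = ""


def register(email, age):
    """Hypothetical system under test: a registration form's validation."""
    if "@" not in email:
        return "invalid email"
    if age < 18:
        return "too young"
    return "ok"


def run_flow(rows):
    """Data-driven loop: execute the parameterized flow once per data row."""
    results = []
    for number, row in enumerate(rows, start=1):
        try:
            actual = register(row["email"], int(row["age"]))
            passed = actual == row["expected"]
            detail = "" if passed else f"expected {row['expected']!r}, got {actual!r}"
        except Exception as exc:
            # A bad row is recorded as a failure; the loop continues.
            passed, detail = False, f"error: {exc}"
        results.append(RowResult(number, row, passed, detail))
    return results


def summarize(results):
    """Condense the per-row results into a small report."""
    failed = [r.row_number for r in results if not r.passed]
    return {
        "total": len(results),
        "passed": len(results) - len(failed),
        "failed_rows": failed,
    }


# Hypothetical data rows; rows 3 and 4 are meant to fail.
ROWS = [
    {"email": "a@example.com", "age": "30", "expected": "ok"},
    {"email": "not-an-email", "age": "30", "expected": "invalid email"},
    {"email": "b@example.com", "age": "17", "expected": "ok"},
    {"email": "c@example.com", "age": "abc", "expected": "ok"},
]

results = run_flow(ROWS)
summary = summarize(results)
print(summary)
for r in results:
    if not r.passed:
        print(f"row {r.row_number}: {r.detail}")
```

Recording a result per row, rather than stopping at the first failure, is what lets you see which specific data sets passed or failed.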

Generating data with AI for data-driven testing

Leapwork has introduced a revolutionary approach to managing test data generation. With the Generate AI capabilities embedded in Leapwork's test automation platform, you can create data directly within your test automation workflows. There’s no need to exit your automated testing environment to generate data. Simply specify the exact data you require, and the system will generate unique data that meets your specifications each time the test is executed.

Additionally, if you need to transform data, such as masking production data for testing purposes, Leapwork offers Transform AI capabilities. This feature helps protect privacy by allowing you to modify data in real time.
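To illustrate what masking means in general, here is a simple rule-based sketch in Python. It is not how Leapwork's Transform AI works internally, and the function names are invented for this example.

```python
import hashlib


def mask_email(email):
    """Replace an email with a stable pseudonym at a reserved example domain."""
    digest = hashlib.sha256(email.lower().encode("utf-8")).hexdigest()[:10]
    return f"user_{digest}@example.com"


def mask_phone(phone):
    """Keep only the last two digits; replace the other digits with '*'."""
    digits = [c for c in phone if c.isdigit()]
    return "*" * max(len(digits) - 2, 0) + "".join(digits[-2:])


def mask_record(record):
    """Return a copy of the record with sensitive fields masked."""
    masked = dict(record)
    masked["email"] = mask_email(record["email"])
    masked["phone"] = mask_phone(record["phone"])
    return masked


# Hypothetical production-like record.
original = {"name": "Jane", "email": "Jane@corp.example", "phone": "+45 12 34 56 78"}
masked = mask_record(original)
print(masked)
```

Because the email pseudonym is derived from a hash, the same input always maps to the same masked value, which keeps relationships between records intact. Note that a plain hash like this is only a sketch; real anonymization usually needs stronger guarantees.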

Furthermore, if you need to select specific data within a dataset, you can utilize the Extract AI capabilities. For instance, if your dataset includes fields like First Name, Last Name, Phone Number, and Email, but you only need the First and Last Names, you can specify this requirement, and it will automatically extract the specified data.
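In code terms, the First Name and Last Name example amounts to selecting columns from a data set. The Python sketch below shows that idea with made-up contact rows; it illustrates the concept only and does not represent Leapwork's Extract AI.

```python
import csv
import io

# Hypothetical data set with the four fields mentioned above.
CONTACTS = """First Name,Last Name,Phone Number,Email
Ada,Lovelace,555-0100,ada@example.com
Alan,Turing,555-0101,alan@example.com
"""


def extract_fields(source, wanted):
    """Return one dict per row containing only the wanted columns."""
    reader = csv.DictReader(io.StringIO(source))
    missing = [name for name in wanted if name not in (reader.fieldnames or [])]
    if missing:
        raise KeyError(f"columns not found: {missing}")
    return [{name: row[name] for name in wanted} for row in reader]


names = extract_fields(CONTACTS, ["First Name", "Last Name"])
print(names)
```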
